Alok Shah

I'm a student at the University of Pennsylvania, where I study Computer Science, Mathematics, and Electrical Engineering. Outside of class, I lead MLR@Penn, the undergraduate AI and ML research organization and community at Penn, and serve on the Student Advisory Board for the Wharton AI & Analytics Initiative. In my free time, I enjoy historical documentaries, geography trivia, trying new food trucks and restaurants around Philly, and burning off those calories by playing pickup soccer.

Projects

I play simple tricks on machine learning models to study their training dynamics. I tend to interpret the phenomena that emerge from these experiments through a lens informed by theory. I'm interested in applying such insights to problems in robotics and language.

Dropout, Johnson-Lindenstrauss, Low-Rank Bias, and Generalization

Alok Shah*, Mohul Aggarwal*, Khush Gupta

CIS 6770 Final Project: An alternate perspective on the generalizing behavior of dropout through the lens of random projections and low-rank bias

Language Modeling With Learned Meta-Tokens

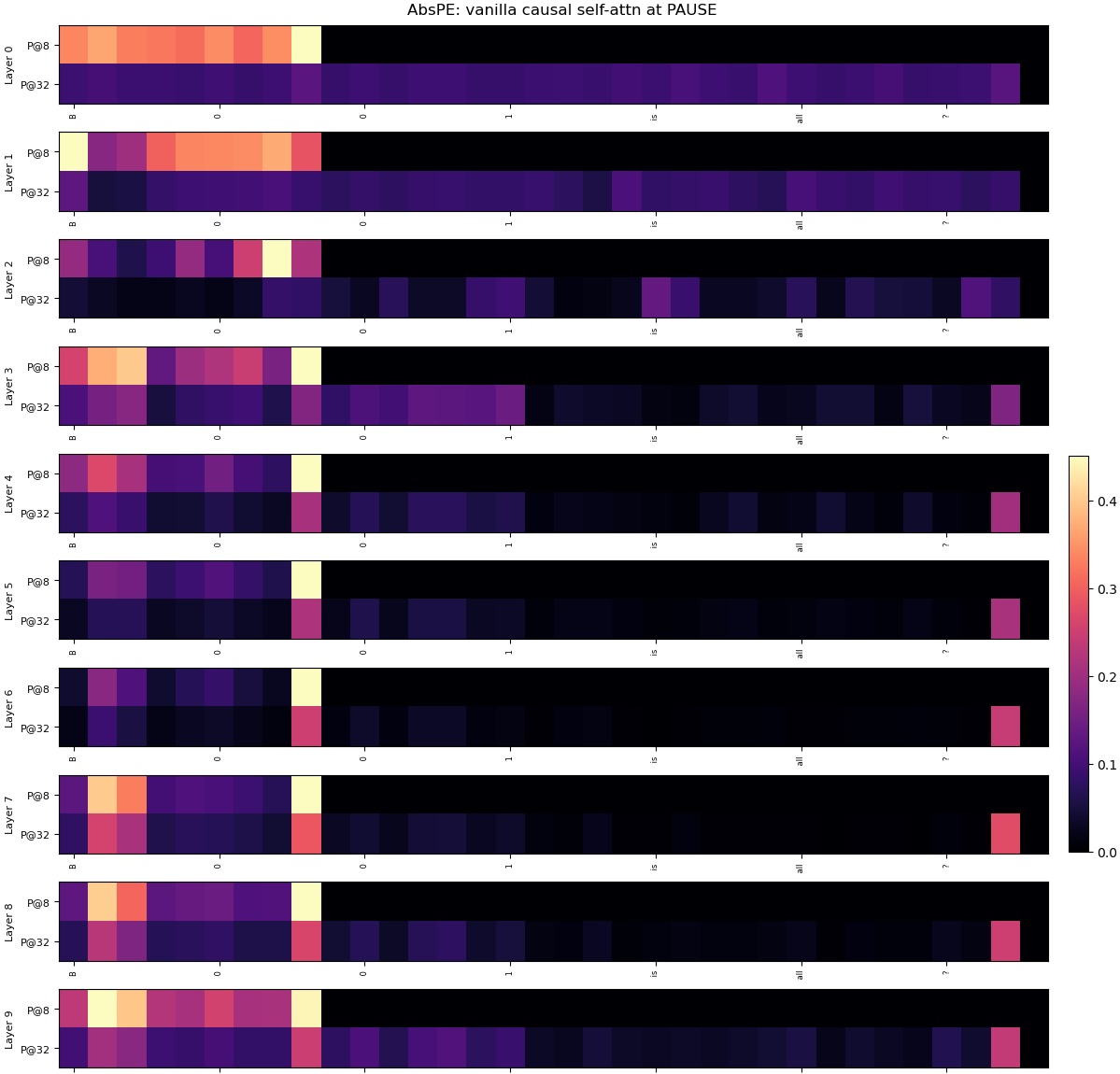

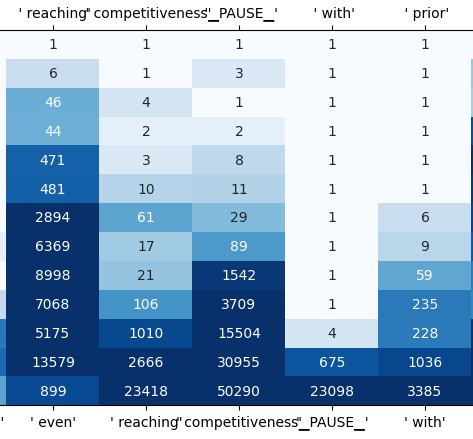

Alok Shah*, Khush Gupta*, Keshav Ramji, Pratik Chaudhari

ICML LCFM, 2025

Showed that meta-tokens provably improve context propagation in language models across extreme lengths via rate-distortion analysis and positional ablations

Investigating Language Model Dynamics using Meta-Tokens

Alok Shah*, Khush Gupta*, Keshav Ramji, Vedant Gaur

NeurIPS ATTRIB, 2024

ESE 5460 Final Project: Explored how to coerce communication between token-level checkpoints for more interpretable, capable models

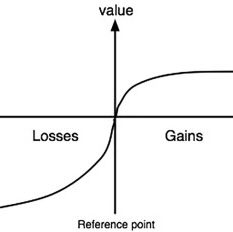

Modeling Human Behavior Without Humans: Prospect Theoretic Multi-Agent RL

Sheyan Lalmohammed*, Khush Gupta*, Alok Shah*, Keshav Ramji

ICML MAS, 2025

STAT 4830 Final Project: A cool way to induce human-like risk-averse behavior among multiple agents by warping the reward landscape in Choquet integrals in accordance with Cumulative Prospect Theory

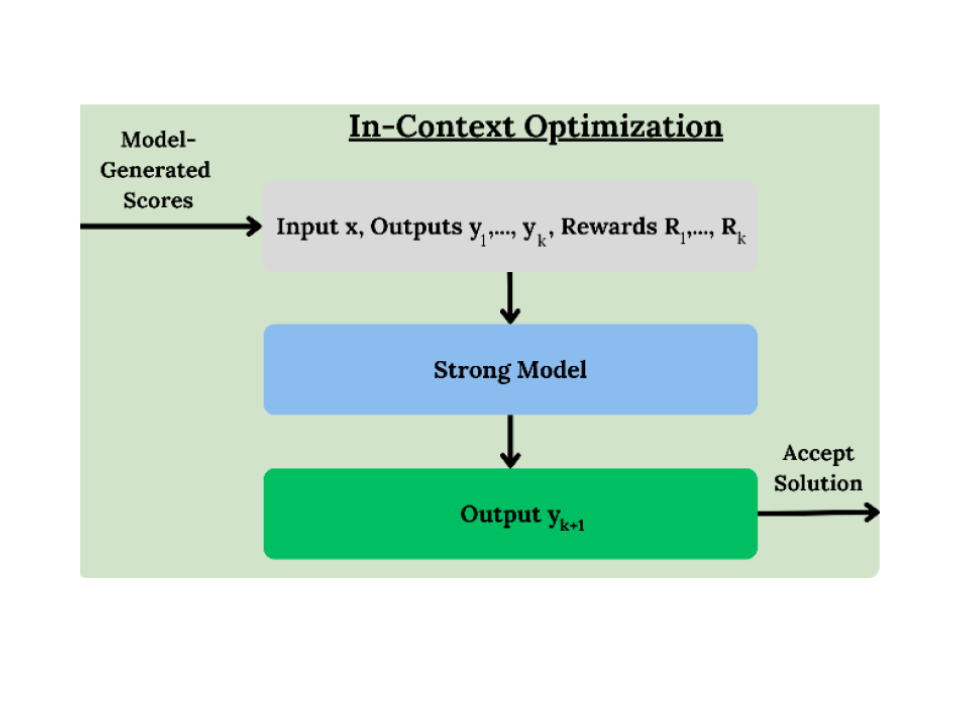

Weak-to-Strong In-Context Optimization of Language Model Reasoning

Keshav Ramji*, Alok Shah*, Vedant Gaur*, Khush Gupta*

NeurIPS ATTRIB, 2024

Developed an in-context optimization method that leverages weak learners to improve reasoning in strong large language models without additional finetuning

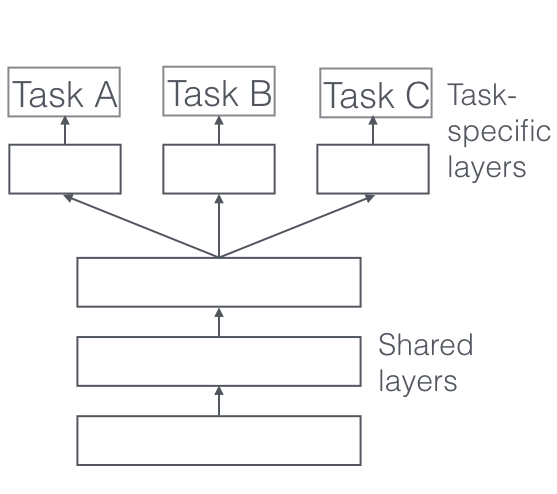

A Note on Multitask Learning with Task-Adaptive Priors

Alok Shah, Sidhant Srivastava, Tara Kapoor

CIS 7000 Final Project: By extracting task-dependent statistics, we learn a prior that provably nudges the model toward representations that generalize better to new tasks.

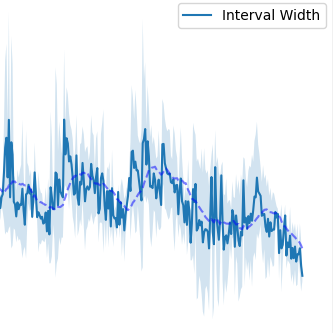

Conformal Actor-Critic: Distribution-Free Uncertainty Quantification for Offline RL

Alok Shah, Nikhil Kumar, Khush Gupta, Mohul Aggarwal

CIS 6200 Final Project: Integrated conformal prediction into offline reinforcement learning, providing statistically robust uncertainty quantification to curb overestimation bias of Q-values

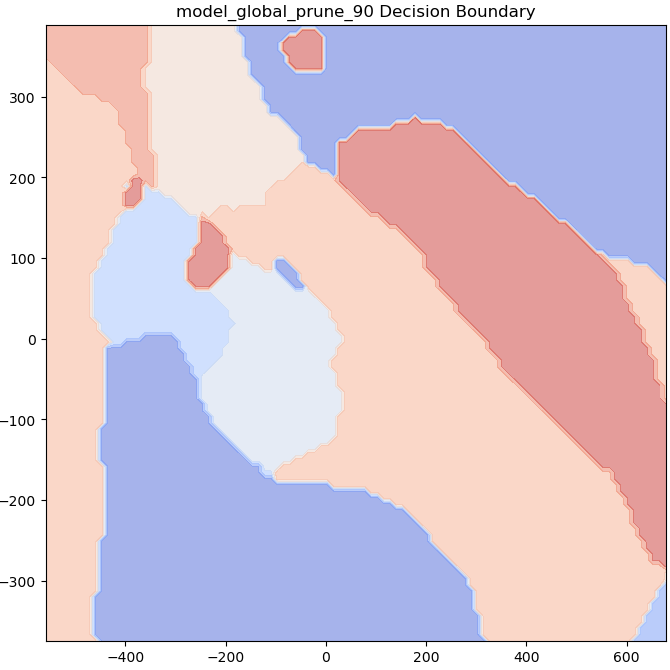

Deep Compression with Adversarial Robustness via Decision Boundary Smoothing

Alok Shah, Michael Shao

ESE 5390 Final Project: Smoothed adversarial retraining during compression produces compact models with stronger robustness

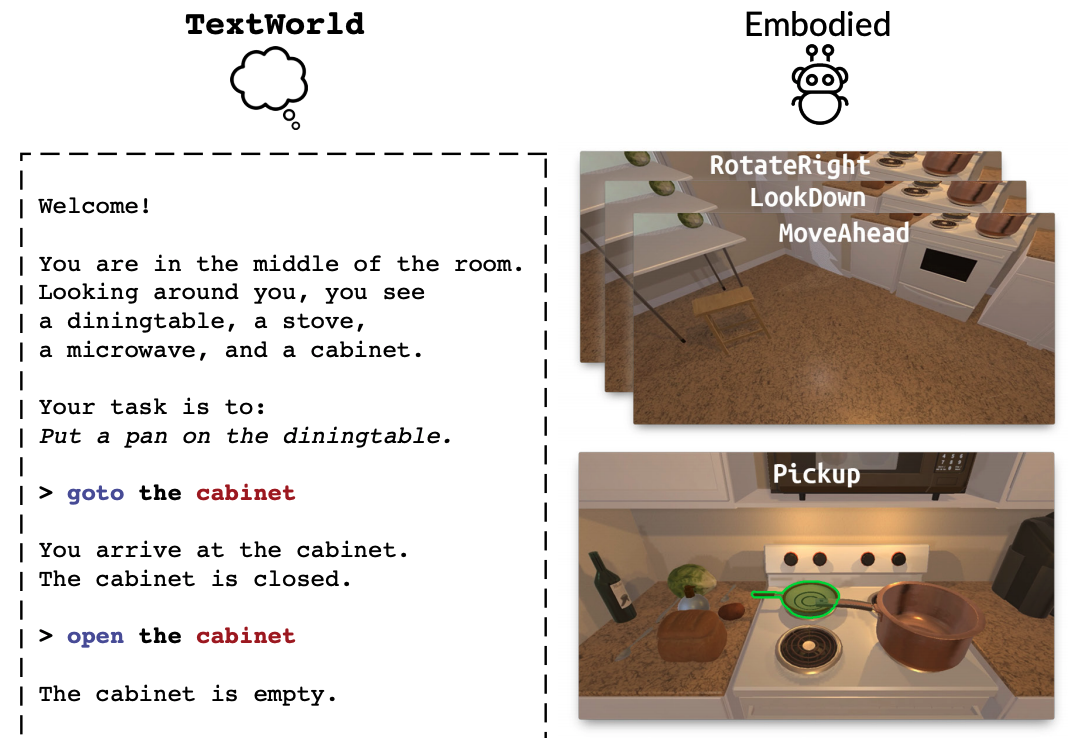

AlfLLM: Limitations on LLMs as Reward Function Surrogates

Alexander Kyimpopkin, Alok Shah, Dominic Olaguera-Delogu

ESE 6500 Final Project: Investigated the efficacy of using large language models as surrogate reward functions for reinforcement learning in the ALFWorld environment.

Selected Coursework

* denotes graduate level; ** denotes doctoral level

- Honors Analysis in Several Variables

- Discrete Mathematics

- Linguistics

- Data Structures and Algorithms

- Advanced Linear Algebra*

- Probability*

- Computer Systems

- Machine Learning*

- Real Analysis

- Topology*

- Differential Geometry*

- Operating System and Design

- Ethical Algorithm Design*

- Convex Optimization**

- State Estimation, Control, and Reinforcement Learning**

- Deep Learning*

- Automata, Computability and Complexity

- Analysis of Algorithms*

- Uncertainty Quantification**

- Learning for Dynamics and Control**

- Hardware/Software Co-Design for Machine Learning*

- Stability in Optimization and Statistics**

- Bayesian Optimization**

- Randomized Algorithms and Numerical Linear Algebra**

- Numerical Optimization for Data Science and Machine Learning

- Statistical Topics in Large Language Models**

- Statistical Mechanics**

- General-Purpose GPU Programming Architecture**

- Physical Intelligence**

- Human-Computer Interaction*

- Acceleration and Hedging in Optimization**

- Senior Design

Teaching

I enjoy teaching and have served on staff for several courses.

* denotes graduate level; ** denotes doctoral level; ^ denotes head teaching assistant

- Mathematics of Machine Learning (Eric Wong)

- Machine Learning*^ (Surbhi Goel, Eric Wong, Jake Gardner, Lyle Ungar)

- Statistics for Data Science** (Hamed Hassani)

- Deep Learning* (Pratik Chaudhari)

- State Estimation, Control, and Reinforcement Learning** (Pratik Chaudhari)

- Convex Optimization** (Nikolai Matni)